If AI is so good, why isn’t my voice assistant more useful?

John Goddard | March 5th, 2025

Why it’s still hard to build great products in the age of AI…

Introduction

Here are some recent conversations where my voice assistant has struggled:

[Sitting down for dinner]

Me: “Alexa, play some soft jazz guitar music.”

[Silence]

Me (more clearly this time): “ALEXA… play some soft jazz guitar music.”

Alexa: “Sorry, that device is offline.”

[I stare at my plate, contemplating whether we have five or six music services connected to our Alexa, which is very much still online]

——————————————-

Me: “Alexa, close the curtains.”

Alexa: “There are multiple devices named curtains. Would you like to close living room curtains or curtains 5371?”

[Brief pause as I ponder this: we only have one set of curtains, and this command has always worked. I take a guess]

Me: “Living room curtains.”

[The curtains in our bedroom close]

———————————————-

Wife: “Alexa, what are the lyrics to Toxic by Britney Spears?”

Alexa (in typical voice assistant monotone): “Baby can’t you see, that I’m calling…” [completes first verse]

Wife: “Keep going.”

Alexa: “Hmm, I’m not sure about that.”

——————————————-

AI has made incredible recent progress, but voice assistants are still plagued by the rigidity and lack of contextual awareness they launched with years ago. For most people, voice remains an interface of last resort, reserved for occasionally pulling up directions or playing a song on a smart speaker.

With LLMs capable of nuanced and aware conversations, why are we having so much trouble making everyday language-based products more useful?

Agentic Systems

To understand why voice assistants often stumble, let’s take a step back and look at agentic systems more generally.

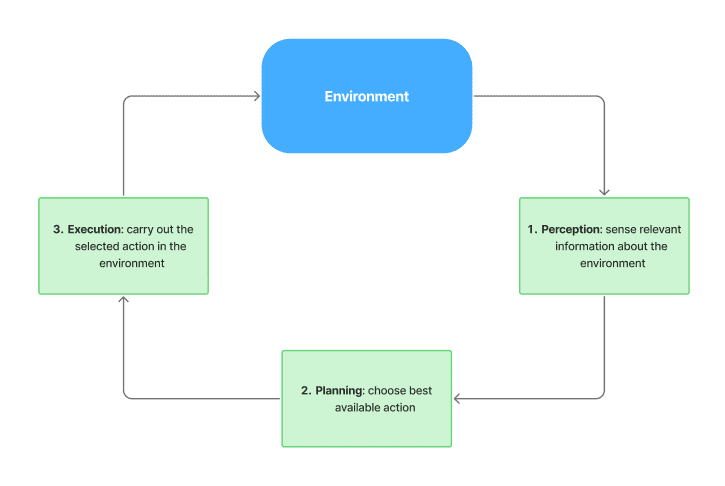

Automated systems taking actions on behalf of users interact with the world in three steps:

- Perception: understand the slice of the world relevant to our system

- Planning: enumerate the relevant decision space and select the correct action

- Execution: properly carry out the action chosen in the planning step

For a simple feedback loop, these steps might be trivial: if the temperature reading is too high (perception), switch off the power (planning and execution).
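The three steps can be made concrete with the temperature example. The sketch below is a minimal, hypothetical Python illustration. The `Thermostat` class, the 25 °C threshold, and the stubbed sensor and actuator functions are assumptions, not part of any real product. Perception reads a value, planning picks from a two-option decision space, and execution hands that choice to an actuator.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Thermostat:
    """Toy agentic loop: perception -> planning -> execution (hypothetical)."""

    max_temp_c: float                  # assumed cutoff threshold in degrees Celsius
    read_temp: Callable[[], float]     # perception: stubbed sensor reading
    set_power: Callable[[bool], None]  # execution: stubbed actuator

    def perceive(self) -> float:
        return self.read_temp()

    def plan(self, temp_c: float) -> bool:
        # The entire decision space is {power on, power off}.
        return temp_c <= self.max_temp_c

    def execute(self, power_on: bool) -> None:
        self.set_power(power_on)

    def step(self) -> bool:
        power_on = self.plan(self.perceive())
        self.execute(power_on)
        return power_on


# Usage with stubbed hardware: three readings, the last one too hot.
readings = iter([20.0, 24.5, 31.0])
power_log: list[bool] = []
heater = Thermostat(max_temp_c=25.0, read_temp=lambda: next(readings), set_power=power_log.append)
for _ in range(3):
    heater.step()
print(power_log)  # [True, True, False]
```

Swapping the stubs for a real sensor driver and relay would not change the shape of the loop. In harder systems, what grows is the size of each step.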

For a more sophisticated system, like an autonomous vehicle, things are more challenging. The slice of the world to understand is broader: where we are in space relative to other objects, relevant signs and lane lines, the state of traffic lights, the local road laws, etc. Our available action space is large and has more dimensions: we can accelerate or brake, continue straight or swerve, turn on our blinker, etc. To execute our actions, we need to communicate with the car’s control systems quickly and accurately.

A typical voice assistant also operates in a complex environment. It needs to understand what a user said and then choose and execute an action from a set of available integrations and APIs. There are challenges at each step: picking out the right voice from the background noise, parsing accents correctly, tracking active integrations, choosing the right action, etc.

Improvements with AI

AI is enabling progress in automated systems, especially in the perception and planning phases.

On the perception front, AI gives our systems a more flexible and nuanced understanding of the world. Computer vision models, like the ones we work on at Matroid, are increasingly capable of detecting more things in more contexts. LLMs should lend voice assistants near human-level language fluency and comprehension.

We’ve also seen impressive progress in planning. Systems can choose correct actions without explicitly programming logic for every possible scenario. A Waymo can navigate the world with steering and acceleration inputs that are smoother and more accurate than those of most human drivers. If you give an LLM some API documentation and a few examples, it can often construct a correct API request for a simple task.

However, there are still limitations: parts of an environment might be too complex or nuanced for a perception model to understand. A voice assistant can only pull so much signal out of a noisy room.

Additionally, complex decision spaces make planning difficult. When using LLMs to perform complex tasks with access to sprawling APIs, it’s often easier to first build a layer of abstraction the LLM can target. Reducing hundreds of API endpoints into tens of composable logical blocks greatly condenses the decision space an LLM-based agent must consider, increasing the odds of success.
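To illustrate the abstraction-layer idea, here is a minimal Python sketch. Every endpoint, action name, and identifier in it is hypothetical. Five stand-in low-level calls are wrapped into two composable actions, `play_music` and `pause_music`, and a `dispatch` function only accepts actions from that small allowlist. In a real system an LLM would produce the action name and arguments; here that choice is hard-coded.

```python
from typing import Callable

# --- Hypothetical low-level endpoints (stand-ins for a sprawling device/service API) ---
CATALOG = {"soft jazz guitar": "playlist:jazz-guitar-01"}
SPEAKERS = {"dining room": "speaker-7"}
calls: list[str] = []  # records which low-level endpoints were hit


def search_catalog(query: str) -> str:
    calls.append("search_catalog")
    return CATALOG[query]


def resolve_speaker(room: str) -> str:
    calls.append("resolve_speaker")
    return SPEAKERS[room]


def enqueue(speaker_id: str, item_id: str) -> None:
    calls.append("enqueue")


def start_playback(speaker_id: str) -> None:
    calls.append("start_playback")


def pause(speaker_id: str) -> None:
    calls.append("pause")


# --- Abstraction layer: a small set of composable actions the planner may target ---
def play_music(query: str, room: str) -> str:
    """One logical block that hides four endpoint calls."""
    speaker = resolve_speaker(room)
    item = search_catalog(query)
    enqueue(speaker, item)
    start_playback(speaker)
    return f"Playing {item} on {speaker}"


def pause_music(room: str) -> str:
    speaker = resolve_speaker(room)
    pause(speaker)
    return f"Paused {speaker}"


ACTIONS: dict[str, Callable[..., str]] = {"play_music": play_music, "pause_music": pause_music}


def dispatch(action: str, **args: str) -> str:
    """Run a planner-chosen action, rejecting anything outside the condensed space."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action: {action!r}")
    return ACTIONS[action](**args)


# An LLM planner would emit this structured choice; it is hard-coded here.
print(dispatch("play_music", query="soft jazz guitar", room="dining room"))
# Playing playlist:jazz-guitar-01 on speaker-7
print(calls)
# ['resolve_speaker', 'search_catalog', 'enqueue', 'start_playback']
```

The planner now chooses between two actions rather than among every endpoint, and the allowlist gives a single place to reject malformed or unexpected requests.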

Execution Challenges

Unlike perception and planning, execution is largely still dependent on existing systems.

LLMs are proving themselves capable of tasks like building simple web pages or analyzing data. Rapid progress for use cases like these is possible because we already have the execution part down: we were already really good at executing code cheaply in different environments before LLMs came around.

But if tasks extend beyond the digital world of running code, making API calls, and automating browser sessions, we first need to build integrations between our agentic systems and hardware. It wouldn’t matter if AI could generate instructions to fold my laundry, because we don’t have a common hardware platform that could carry out those instructions.

Voice assistants can only execute digital tasks for which they have proper integrations. Improved perception and planning will help them navigate more advanced integrations and take on more complex tasks, but building more integrations is a prerequisite for progress.

Trust Issues

Even when a voice assistant can handle a task, there’s another adoption barrier: we might not trust it to do a good job.

Consider booking a flight, a purely digital task a voice assistant or chatbot could probably handle.

When I choose an itinerary on Kayak, it’s based on some blend of price, trip duration, flight timing, airline, and potential rewards use or accrual. It’s a fairly complicated heuristic that would be tricky to describe accurately, and my ultimate choice usually differs from Kayak’s recommended best choice. The stakes are high: a mistake here could cost me hundreds of dollars or make for a miserable travel day.

Ordering food or making a dinner reservation presents similar challenges: I might notice details on the menu or in photos that a model would miss.

Information-rich aggregators and review sites are still great interfaces for these types of tasks. Until automated assistants can prove that they will reliably make the same decisions we would, many users will continue to eschew chat and voice for the apps and sites they’re used to.

Navigating Heterogeneous Environments

Another core challenge for AI assistants is that the world they must navigate is a messy one.

Revisiting my home voice assistant is illustrative: I’ve got Alexa running on a set of Sonos speakers. Four different music services are connected through the Alexa app, and an additional three are connected through the Sonos app, including two separate Spotify accounts.

When I ask Alexa to play music, it has to navigate this mess, somehow selecting one of these services, figuring out the song or playlist that matches my request, and then talking to the API of a speaker made by a company that has publicly apologized for the quality of its software. And this isn’t even a particularly complicated setup by the standards of home entertainment systems.

Voice assistants are as ubiquitous as cell phones, but for each user, the set of available integrations, hardware, and accessible data is slightly different. It’s a minor miracle that they work at all.

Yet, after getting used to mostly accurate and nuanced conversations with LLMs, how could we not expect more? My entire chat history with Alexa could fit into the context window of a modern LLM, but there’s no evidence of any contextual awareness. I frequently have to repeat myself or rephrase things to get the right result.

It isn’t particularly surprising to see failures like the Rabbit R1 from companies new to the assistant space. But even the giants like Apple – a company with a legendary product pedigree that can tightly control the environment its voice assistant operates in – can’t quite figure it out: tepid reviews for Siri’s new Apple Intelligence features only underscore the complexity of the challenges.

Of course, just because we could enable context for voice assistants or let them step outside a narrow set of pre-defined actions doesn’t mean that we should. Potential unintended consequences abound: contextual awareness could easily become a vector for data leaks, and making untested API calls could wreak all sorts of havoc for users. Heterogeneous deployment environments exacerbate the challenges: it’s a vast surface area against which to test new features safely.

Automated Systems with Computer Vision

So, how do voice assistants relate to the work we’re doing at Matroid? We’re not in the business of assistants, but we work on making automated systems more useful in diverse environments.

While voice assistants struggle with the messiness of varied home setups, at Matroid we need to deal with the reality of modern industrial environments. There’s a lack of industry standardization, and the complexity of IT systems with generations of legacy components and Byzantine network architectures quickly dwarfs that of any home entertainment system.

Our product largely focuses on perception, enabling our customers to detect safety and quality issues. But we recognize that the best solutions are complete ones, so we’ve also built a number of features to flexibly integrate into diverse environments: customizable APIs, PLC integrations, physical device integrations, and support for human-in-the-loop workflows make it possible for our customers to make decisions and take action with computer vision.

Deployments will become simpler as AI tooling becomes standard in industrial settings. In the meantime, our talented solutions team works closely with customers to design the hardware footprints and integration points necessary to be successful in any environment. It’s this blend of flexible product features and a semi-tailored approach that enables our customers to maintain quality standards, maximize efficiency, and keep their workers safe.

Looking Forward

Voice assistants will undoubtedly become more accurate and useful as AI improves. Context awareness, hybrid visual and voice input, and more useful actions are likely on the roadmaps for most of the teams working in the space.

However, progress in voice assistants and automated systems will depend on more than just model advancements; it will require engineering systems and platforms AI can interact with across a broader range of tasks, bridging the gap between understanding and execution.

Until we see that progress? I’ll probably just pull out my phone to pick the dinner music.

About the Author

John Goddard is the Director of Product Engineering at Matroid. When he’s not writing code, you can usually find him on a rock wall, at the table with some overly complicated board game, or at a local playground with his toddler.
